This is the post where I talk about my Ants bot and its development process. It finished in 71st place in the AI Challenge 2011. You can read about the contest details and the overall results in my other blog. Here is the complete source code in a zipped file. For a while I toyed with the idea of learning Python and using it to make a bot as the game engine and tools are themselves written in Python, but I realized that speed is huge in this challenge and Python is much slower than Java, so I switched back to Java which is still the language I’m most comfortable with.

Early Versions

The earliest version of my bot used lots of breadth-first searches (BFS) for food. I would search from all food tiles, stop when I find one of my ants and then order that ant to move towards the food. To save time, it also had the capability to save orders for ants. No point in having the ant do a new search every turn. Instead, at the beginning of each turn, it would go through the list of all saved orders, determine if the order was still valid (i.e. meaning that there’s still an ant at one end of the path and a food at the other end) and have the ant carry out the order. I also saved all my search results as a sort of cache of point-to-point paths. Searching through saved paths like this was way faster than doing a brand new BFS every time.

My exploration system was a crude hack but it worked surprisingly well. For each tile on the map, I simply kept a running total of how many times it has been visited by one of my ants, incrementing that total whenever one of my ants is standing on that tile. Then, when I had ants with nothing better to do and needed to explore, I would just have it look at its neighboring tiles and choose to go to the tile that has been visited the least number of times. I also wrote an exploration routine that uses A-star to pathfind towards tiles that I’ve never seen, but it worked too slowly so I abandoned this approach.

The only way to win a match is to kill enemy hills. At first, I only used a simple heuristics-based system to move my ants closer towards enemy hills that I could find. No real pathfinding involved. And of course no combat code at all. This was enough to get my bot into the top 1000, albeit just barely. I had a cutoff timer to prevent my bot from going over 500 milliseconds by checking the time left just before I did a new BFS, which is the only computationally intensive part of the code, but I would still time out on rare occasions. On very big multi-hill maps, the bot sometimes choked on the first turn too, due to the need to parse and process all the map data on the first turn, in addition to the normal BFS runs for food.

Minor Improvements

Obviously the bot’s biggest weakness was lack of intelligence when it came to tracking down and destroying enemy hills. It was around this time that I read this thread on the official forums, together with the paper it linked to about collaborative diffusion. Basically this approach would shift computation from ants to the map itself. Objects of interest would emit a scent, just like the old SimAnt game from Maxis, and ants just need a bit of logic added to make them seek out destination tiles with the strongest scent.

As a proof of concept, I quickly implemented a version of this just for seeking out enemy hills. I left out the collaborative part of the diffusion process, which involves the presence of your own ants damping the scent. This is because when it comes to attacking enemy hills, the more the merrier. I combined this with a very primitive combat AI that tries to map combat influence for my ants and enemy ants that I know about, and use this information to sort map tiles into zones that are safe to walk into and those that are forbidden. It was very crude mind you, as I couldn’t think of a better way than summing up the combat strength in a zone and comparing my strength to that of enemy ants.

Later, I noticed that using the diffused scent to guide my ants into enemy hills worked a bit too well, in that my ants would stupidly march into zones with massed concentrations of enemy ants and get themselves killed. So I added a bit of strategic logic to restrict the number of ants that were allowed to participate in attack missions and tried to turn attack mode on only if the bot thought that it had more ants than the enemy. Anyway, the combination of all these incremental improvements was enough to propel my bot into the 600 range.

Pure Diffusion Version

This experiment convinced me that diffusion works and that I should try making a version that does everything using scent maps. This means food gathering, exploration and seeking out enemy hills. Well, everything except actual combat. One problem with using diffusion compared to a more direct pathfinding algorithm is that the diffused scent must spread around far enough that an ant can sense it before it can do anything about it. For example, if a food is ten tiles away from the closest ant and the scent diffuses only one tile per turn, it will take ten turns for the scent to reach the ant so that it will start moving towards the food. The obvious solution is to diffuse many steps in a single turn but this is computationally intensive.

So to take full advantage of diffusion, I started my bot all over from scratch. I tried to make everything as lightweight as possible. Whereas the previous bots used lots of specialized objects, I tried to stick with primitives whenever possible and stored everything in arrays and hashmaps. This allowed me to do lots of data lookups very quickly. For example, instead of the default method of storing ants, both mine own and those of the enemy, as tile objects in lists, I created a two-dimensional array of the entire map and used integers to represent an item of interest at the appropriate coordinates.

I also decided to use two different scent maps instead of just one. One map would store food and exploration information. The scent values on this map are dampened by the presence of my own ants, so that an ant that is moving food will block its scent, discouraging other ants behind the first ant from moving towards the same food. The second map was used to store enemy hill scents, modified by the presence of known enemy ants. I wanted to create a reinforcement effect such that high concentrations of enemy ants would attract my own ants to move near them. The scent values on this map were not dampened by the presence of my own ants.

My testing showed that following these optimizations, I could perform over 300 diffusion steps while staying within the time limit. This is much more than necessary of course, and it would be nice to leave more margin for error and perhaps save some time for processing combat moves. Eventually I settled on a value of 100 diffusion steps for the food and exploration map and 50 diffusion steps for the enemy hill map. These values seemed to work well.

I also used trial and error to decide on the scent values of different objects of interest. Food for an example has a value of 1000 while tiles that I have never seen has a value of 500. In addition, my scent maps also keep track of which tiles are currently visible to my ants and give a small bonus to tiles that aren’t currently seen. This helps ensure that my ants spread evenly around the territory that I control so as to ensure that all tiles remain visible. This is very important as food spawns semi-randomly on the map and ants need to see newly spawned food as quickly as possible to snag it.

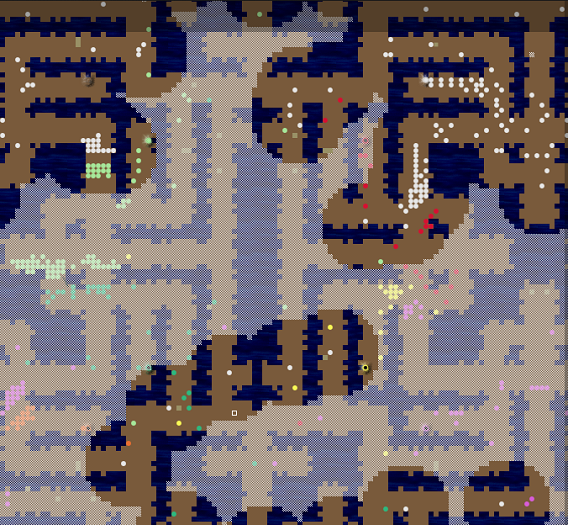

The results were very satisfying indeed. Even though this version of the bot had no combat logic at all, it could beat all previous versions of my own bots with perfect certainty simply by outgrowing them. It was highly efficient at gathering food and exploring and overwhelmed other bots through sheer numbers. Best of all, the diffusion system ensured a constant and predictable operation time independent of the number of my own ants. This meant no more time out problems. When I uploaded this version onto the official servers, I jumped straight into the 200+ range. Top bot status was finally within sight!

Combat AI

I experimented with adding the primitive combat AI I previously had into the diffusion version of the bot, but it didn’t seem to make much difference so I left it out. Just for the hell of it, I also wrote my first recursion routine to try to solve combat through brute force. I iterated through all possible moves of my ants that were in imminent danger of combat to try to select the best combination of moves. But even when I abstracted movement for opponent ants, either by assuming that they won’t move or that they move in all permissible directions simultaneously, the problem space was just too large to effectively explore. I actually caused the Java Virtual Machine to run out of memory when I tried to work out combinations involving ten ants or more.

Then about a week before the closing date of submissions, a1k0n who won the first Tron AI Challenge, started the ball rolling by posting in the official forums details of his combat strategy and answered any queries others had. His approach used a statistical sampling technique to selectively explore the vast problem space without needing to exhaustively iterate through every possibility. Later it turned out that others had used similar techniques, such as Ben Jackson’s simulated annealing. I did implement the sampling approach but I couldn’t get good results out of it. I’m not sure if it was because my evaluation function was flawed or if there were subtle bugs in my implementation.

But this post encouraged others to share their combat strategies including the very elegant and lightweight solution Memetix used and I adopted without much trouble. In a way, this approach is the natural culmination of my own very primitive efforts but I could never have reached it on my own. You really need to bend your mind and arrive at a deep understanding of the combat system to achieve what Memetix did.

Like my own system, this one mapped all combat strength values for all known ants. But Memetix understood that each ant needed to fight its strongest enemy, and so generated a map of how many enemies each ant would fight in every position that it could move to in one turn. This allowed him to create a map of tiles that are known to be safe, that would result in 1-to-1 kills, and that would result in your own ants dying without any enemy losses. Due to the basic similarity with my previous system, I was able to cannibalize my own code and implement Memetix’s system very quickly and it worked great!

In addition to avoiding danger spots, my own implementation also encouraged my ants to move in to kill enemy ants wherever it was possible to do so without danger. When I uploaded this version of the bot, its performance exceeded my wildest expectations and peaked inside of the top forty. Unfortunately, this was as far as I could go. I did later try to make some improvements. This system was still just an approximation of combat without simulating everything. It had a problem with coordinating ants. One ant might expect its partner to move in together with it to attack an enemy, but the partner might end up abandoning it, leaving it to die alone for example. As Memetix suggested, I recalculated the influence maps whenever an ant committed to a move so the other ants would know where it was moving.

Later I also tried to implement a way to ensure that no tile can be used as the centre of a combat zone more than once, but it didn’t seem to work well. At this time I was sick with high fever for nearly a week after participating in a walkathon, so my code was probably buggy and my efforts were more harmful than beneficial. I actually ended up uploading the wrong version of the bot just before the finals, which contained a subtle bug that would cause the bot to crash on rare occasions. I thought this would ruin any chance of me being in the top hundred but luckily I finished in 71st place anyway.

Final thoughts

Many others have since written posts about their strategy and released their source code, including the winner Xathis. It strikes me that using diffusion and and Memetix’s combat solution relies on emergent behavior. You can’t really predict how your ants might move in detail and it all depends on the local scent values and the combat influence maps. However, the bots in the top 10 used more deliberate moves. Xathis’ bot turned out to be very simple and straightforward. It basically used BFS to search for everything and used a conventional minimax technique to solve combat.

What truly stood out was that he was able to do it very efficiently through a combination of clever code (instead of manipulating the map using coordinates, he stored references to each neighbor tile inside every tile, making his BFS go much faster) and well thought out heuristics to prune the problem space. For example, if staying in place and moving in a couple of specific directions result in no combat, then it is necessary only to consider staying the place as the other two moves have the same result. This greatly reduced the number of possibilities he had to examine. In the same way, the other top bots used BFS to allow for greater, more precise control over their ants’ behavior. It truly astounded me that they were able to implement BFS so fast that hundreds of searches were possible while still leaving enough time to perform combat calculations.

Anyway, while my own bot is pretty crappy in comparison, once again I learned tons from this year’s challenge. I learned the importance of using the right data structures for the given task when performance is critical. I wrote my first recursion program. I learned all about pathfinding techniques even though the final version of the bot ended up not using it. In fact, I implemented many different things that ended up not being used. It was a very satisfying experience and great fun, better than playing any game in fact. I will definitely try to make participation in this challenge an annual ritual.

2 Responses to “Ants”

I love these posts about your entries in AI contests. They’re completely fascinating.

Wow, thanks for this comment. I really appreciate it. I mean I participate in discussions on the official forums, and certainly those folks are keenly interested in each other’s code and chosen strategies, but I was wondering if there was any interest at all in these long posts outside of that narrow audience. It’s very gratifying to learn that there are people interested in these posts.

Leave a Reply