The 2010 Google AI Challenge is now officially over and I’m very glad to have finished just inside the top 50. Not bad for someone with no formal education in programming and next to zero knowledge of Java before this. I learned a bit of JavaScript when I worked as a content writer for one of the very many companies in Malaysia that tried to make its own fortune during the original Dot Com boom. I basically learned Java just for the purpose of entering this contest and picked it because it seemed like the best supported language and uses syntax that’s not too dissimilar to that of JavaScript.

I never expected to do as well as I did. I am by far the highest ranking participant from Malaysia, though this is because there were so few participants. I tried to spread the word around on Facebook and LYN, and I think a few people joined because of that, but none of them made a serious attempt at writing a decent bot. Rather surprisingly, I was also the second highest ranking participant from any Asian country. Top Asian goes to oldman of China who showed up in the top 100 a few days after I wrote this post on my other blog. Other than us, there was one Korean in the top 100 and no less than five Indians.

First place overall goes to Gábor Melis of Hungary though the outcome was never in doubt. His bot bocsimacko was so much better than that of anyone else that it wasn’t much of a contest. It seems that he has a Master’s degree in AI and works in the field. Throughout the course of the contest, I’m pretty sure that I’ve won against just about everyone else in the top 10 at least once, but I’ve never won a single encounter against bocsimacko. One of my favorite battles is this one in which I’d gained a small advantage against him and he pulled off a masterful move to reverse the situation and beat me into the dust.

I spent a lot of time over the past month on this project so I’m going to write a couple of extensive posts on my experiences. This post will cover some basic strategies and how I gradually improved it over time. I’ll write another post later with step by step details of how the final version of the bot plays together with the source code. The game being played is called Planet Wars, adapted from the commercial game Galcon. It’s a very simple game so I’m not going to explain the rules. Anyone who watches the above video should understand how it works and if you need more detail, just read the official Problem Description and the full specifications.

Starter Bots

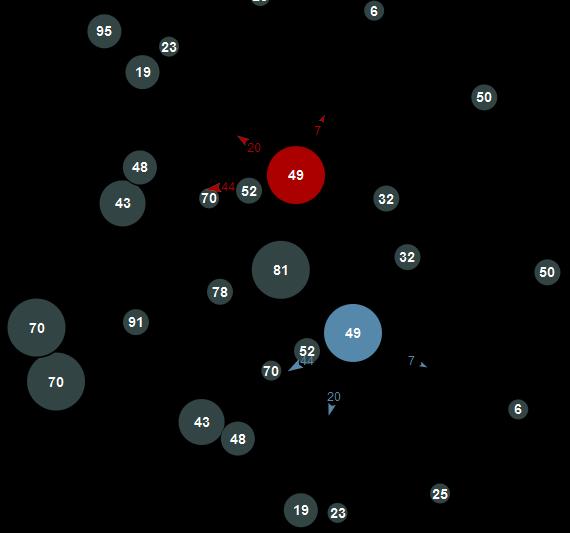

The starter packages provided for participants included a basic bot and a number of other bots to test against, with full source code for everything. The instructions on the contest website also included some basic and sensible suggestions about how to improve the default bot. Not surprisingly, many participants did exactly that and no more. Of the more than 4,600 total participants, about 100 were crashbots, meaning that the programs crashed immediately on the first turn and they were incapable of winning any games. Another 1,000 bots were basically unmodified starter bots. These bots were quickly cutoff in the final round of the tournament as there no point in wasting precious server resources to process games for them.

The default bot, called the ProspectorBot, had a very simple strategy. It tries to maintain only one fleet in space at a time. If it doesn’t already have a fleet in flight, it chooses the planet that it owns with the most ships (the strongest planet) and sends half of the ships there to the weakest planet on the map, regardless of whether it is owned by the enemy or is neutral. The suggested modifications made the bot more reluctant to send ships away from planets with high growth and made it prefer to attack enemy or neutral planets with high growth. It also increased the number of fleets it was willing to maintain by a hardcoded amount.

The bots to test against included the DualBot. This was an improved ProspectorBot which could change strategy depending on whether it was losing or winning. If it was losing, it increased the number of fleets it sent out, effectively a more risky tactic. If it was winning, it decreased the number of fleets it sent but used them to target only enemy planets. It was clearly the most sophisticated of all the included bots. Another one was the Randombot which as its name implies makes completely random moves. It was a very weak, easily defeated bot.

Finally, the package included the notorious RageBot. Many videogames have a concept called the Wall. One moment things are going swimmingly for you and the next thing you know, you run hard into a seemingly insurmountable obstacle. That’s the Wall. The Ragebot was the Wall for participants starting out in Planet Wars. Players would think of new ideas and strategies and happily implement them in their bots, only to see Ragebot smack them down. What made it more galling was that the more tricks you tried to pull off, the more sophisticated you think you’d made your bot, the easier it became for Ragebot to smack you down.

This is because Ragebot had a very simple and very pure strategy. It sends all of its ships out, beginning from the very first turn, and never keeps anything for defense. And it targets only enemy planets, never neutrals. This made it very effective against bots designed to expand as quickly as possible to multiple neutral planets. It could be easily countered by bots that always made moves while being aware of how vulnerable it would leave their own planets and by bots that were capable of moving ships between their own planets to provide for defense, but until participants figured out a way to beat it, it was the Wall.

My Early Bots

Like most people, I started out with the starter bot and made improvements to it over time. Just a few very minor tweaks, like increasing the number of fleets the bot was willing to send out at a time and making the bot favor close planets over distant ones when choosing a target, was enough to secure a place in the 1500s. Over time as I thought of more ideas, I added more tweaks. Sending out lots of fleets for example made the bot expand more quickly but also left already owned planets potentially vulnerable, so I made the number of fleets a function of my production rate, scaling up and down as appropriate.

More complex routines were added over time. I realized that the first turn in the game was critical and having the starting planets of both players very close together presented a particularly dangerous situation. So if the two planets started out very close, I made the bot wait and do nothing on the first turn to see what the opponent would do. If he chose to send out ships to expand, it would leave his home planet vulnerable to invasion and my bot would take advantage of it. If he had launched ships against me, I would be prepared to defend. Otherwise, I would just expand normally myself.

Efficient first-turn expansion was effectively a 01-Knapsack problem (no, I didn’t know this going in, this was one of the things I researched and learned over the course of the contest.) It was quite easy to find good solutions to this problem in Java on the net but after some fiddling I decided that I shouldn’t try to implement solutions that I don’t really understand. Instead I used a greedy approximation algorithm that was easy to conceptualize but probably slightly less efficient. I kept this even up to the final version of my bot.

The combination of all these improvements allowed me to get into the top 1000, stabilizing somewhere in the 800s but I’d hit my second Wall. My bot had tons of tweaks by now, but it was still recognizably based on the starter bot framework. To get any higher, I knew I had to do a major rewrite. I knew what to do. There were plenty of good ideas floating around on the official forums and in the QT3 thread that first brought the contest to my attention. The only problem was learning enough programming in time to implement these ideas.

Being one of the Top Bots

As far as I can tell, every single bot in the top 100 implements at least the following set of features:

- The ability to notice incoming enemy fleets and send fleets from surrounding friendly planets to defend.

- The ability to “snipe” neutral planets and defend against being sniped.

- The ability to route ships from backwater planets to the frontlines wherever it may be.

- The ability to create lists of many potential moves, assign a score to each and execute the best ones.

Planet Wars effectively favors the defender because during the time that an attacking fleet is in flight, the targeted planet has time to grow new ships to help in the defense. This means that attackers will need to have an overwhelming advantage to successfully wrest control of an enemy planet. This is also why defending is very efficient. The father the attacking fleet has to travel, the easier it is to defend against it as nearby friendly planets have more time to react to the invasion fleet and launch reinforcements in response.

Sniping refers to stealing neutral planets from under your opponent’s nose. Let’s say a neutral planet with a growth rate of 5 is being defended by 50 ships. The opponent therefore sends 51 ships to conquer it. If you are able to send 7 ships to arrive precisely on the turn following the conquest, you have successfully gained control of a planet for very little cost and made the opponent waste ships. Naturally, when you’re invading neutral planets yourself, you will want to avoid wasting ships on targets that are snipable by the opponent.

Sending ships to frontline planets is a good default action when your ships have nothing better to do. This puts the ships in a better position to defend or attack as appropriate. This can sometimes be a bad move as it might cause the bot to miss opportunities to expand to neutral planets in the backwaters but it’s an overwhelmingly good move in the vast majority of cases. Finally, many bots use some sort of prioritization system to evaluate the quality of various possible moves, including defense actions, sniping, invading etc. and choose the best ones to execute. I believe that one of the most important aspects of the game is choosing a good scoring system by which to evaluate all the different possible moves.

The first of these features I implemented was defense, which I think worked quite well. At first, my bot could only send reinforcements from one friendly planet to another friendly planet at a time to deal a specific perceived threat but I eventually found a way to enable multiple planets to support the same friendly planet. As for moving ships to the frontlines, I shamelessly stole the implementation that shangas (a fellow QT3 member from Finland who finished in 19th place) explained. However, I modified it slightly to move ships incrementally in stages rather than directly to the frontlines as this seemed to work better for me. This implementation simply defined the frontline as being the friendly planet that is closest to the closest enemy planet.

Defending against sniping was easy enough. I simply made sure that whatever neutral invasions I made were sure to turn a profit in ships even in worst case scenarios. But I had a very hard time writing a good sniping routine. It’s easy enough to launch a single fleet at a neutral planet and respond to that but what if the enemy launches multiple waves across successive turns? How do I allocate ships to snipe each wave and would doing so even be a good idea? Knowing that my solution was half-baked at first, I still submitted it and the combination of all these features got me into the 400 to 500 range.

The Top 100

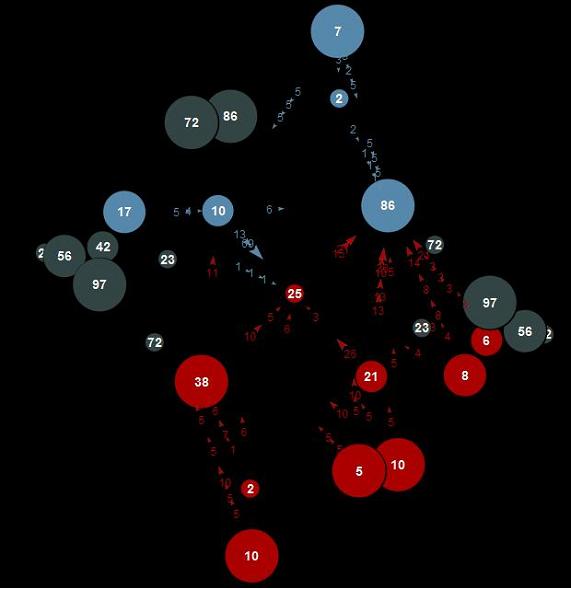

The problem of getting sniping perfect gnawed at me until I realized that it couldn’t be done without calculating all future states of all neutral planets and using that information to plan moves. While I was at it, I might as well calculate that for all enemy planets as well. I’d already known of this from Mistmanov’s extensive overview of his bot’s strategy on the official forums but the difficulty of implementing something that ambitious and processor-intensive was intimidating. Still, with no other room for improvement, I made a go at it, which necessitated learning some new data structures.

As my bot already had a pretty solid defensive strategy, I chose not to perform the future planet state calculations for planets that I owned. As per Mistmanov, I used the distance between the two planets farthest from each other on the map as my horizon and calculated who would own each planet that many turns into the future, based on known fleet movements and planetary growth rates. Using this information, I then calculated the value of fleet movements from each of my planets with available ships and picked the best move to take. Each time I issued a solid order, I also redid the future state calculation for the targeted planet to account for the new fleet movement. This helped keep processing time below the stipulated 1 second limit.

This added feature put my bot within spitting distance of the top 100. Then I had what is probably the most innovative idea of my own. While watching several matches, I notice that my bot often made rather bad moves and realized that it was because those moves, poor as they were, were still the best available at that time. I’d designed my bot to make moves only if it was certain of success, so it would invade an enemy or neutral planet only if it had enough ships to take control of it. So good moves that couldn’t be executed due to insufficient ships were discarded and poor moves were picked instead. The solution was obvious: if the best possible move at the time is still a poor one, the bot should do nothing at all and just wait. This change sent my bot comfortably into the top 100.

As the final deadline drew near, it was evident that the most serious competitors were constantly working on their bots. These revisions didn’t always work but the average quality of the top bots improved steadily. I made improvements of our own as well, but none were very dramatic. For example, I realized that reinforcements to the front frequently arrived too late. Instead of sending ships to where the frontline planets currently are, it would be smarter to send them to where you predict they will be when the reinforcements arrive. I also played around with some minor settings, like how willing the bot would be to make risky moves, but it was hard to tell what effects these changes had.

The final round

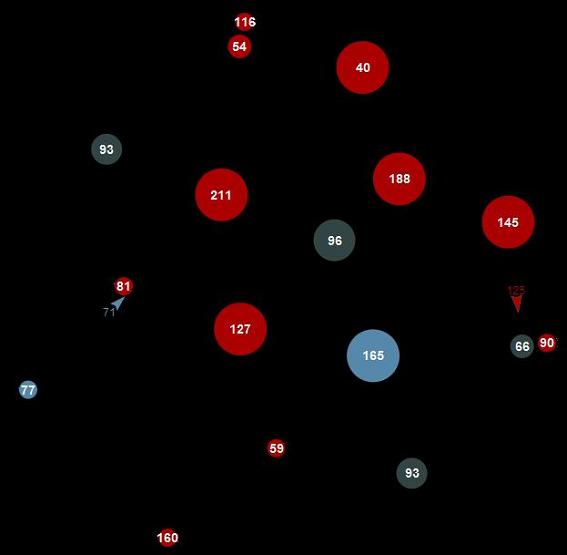

After the server stopped accepting new submissions, all previous results were wiped clean from everyone had to earn their rankings all over again. I had some nail-biting moments when I wondered if I would even make the top 100 as I had lost some matches along the way. Even after I did make it, it became clear that just as I suspected some competitors had held back their best designs until the last moment, so it would be impossible to reach my previous peak. Before the wipe, I’d stabilized in the 30s range and during the final rounds, I peaked in the 40s and almost fell out of the top 50s altogether.

I also noticed that my bot did much better on certain maps than others, so I would sometimes get a long string of defeats if the map rotation didn’t favor me. I did particularly badly on maps with tight clusters of planets spaced closely together as my defense algorithm doesn’t respond until after an enemy had been launched and it if the distances were too short, there wasn’t enough time left to respond anyway. Other players who had taken potential enemy movements into account, rather than just realized ones, did much better on these maps. My bot tended to favor maps in which the planets were more or less evenly spaced, and I could even beat top 10 bots on maps that favored me. The funny thing I never planned on any of this. It was just how things turned out to be.

There was a bit of a controversy on the official forums as the organizers had not finalized some specifications until very late. For example, there was doubt about what the turn limit would be fixed at up to three weeks before the deadline. The organizers also generated new maps based on the stated map generation scripts very late and some contestants were unhappy that they didn’t enough much time to test these maps. C# users also encountered time-out problems which never quite went away. But most of all, due to limited processing power, each bot could only have a limited number of matches and it was understood that the rankings weren’t to be considered to be perfectly fair and precise due to this. But overall, I think the organizers, all of whom were volunteers, did an excellent with limited resources and the results are reasonably fair given the circumstances.

Anyway, that brings this post to an end. I know it’s a very long post and I wonder how many people will even be interested, but I’m very happy with what I managed to accomplish and this is one way to keep a record of it. The next post will detail my bot’s internal logic in a step-by-step fashion and include its source code. I’d also like to list some of the improvements I’d have made if I had more time and cover a bit of what other strategies some of the top players have used.

Leave a Reply